AI pilots fail to scale in midmarket companies when they lack business ownership, production-ready data and integrations, clear governance, and a 90-day path from proof of concept to value. Avoid “pilot purgatory” by tying use cases to processes, building a production runway early, and measuring ROI from day one.

Across midmarket organizations, promising AI proofs of concept get stuck right after the demo. Budgets stall, IT bandwidth is limited, and leaders hesitate to commit without clear ROI or risk controls. If you’ve wondered why AI pilots fail to scale in midmarket companies, the answer usually isn’t model accuracy; it’s operating model readiness. In this guide, you’ll learn a pragmatic framework to escape pilot purgatory, build a production runway, and scale what works in 90 days. We’ll show how to align AI to business processes, design for governance from day one, and use an “AI workforce” approach to deliver results fast.

We’ll also share examples, questions to ask vendors, and a step-by-step plan you can start this week. Along the way, we’ll point to research from McKinsey, Gartner, and MIT Sloan Management Review, plus internal resources like “AI assistants vs. AI agents vs. AI workers” and “AI workflows that build themselves” to help you design for scale, not just demos.

The Pilot Purgatory Costing Midmarket Firms Momentum

Midmarket AI pilots stall when they’re tech-led experiments without business ownership, integration paths, or success metrics tied to P&L. Without a production runway, they remain demos that don’t change workflows, so value never compounds.

For many midmarket teams, pilots live in innovation labs, not in the processes where work happens. There’s no KPI linkage, no process change, and no plan for integration with CRM, ERP, or support platforms. According to RSM’s Middle Market AI Survey, adoption is high, but execution gaps persist—especially around data readiness and governance. The result is familiar: leadership fatigue, “try another pilot,” and minimal operational impact.

This is the core pattern behind most failed scale attempts: the pilot solves a slice of a problem but doesn’t own the end-to-end workflow. No ownership means no budget. No integration means no repeatability. And no repeatability means no scale.

Misaligned business goals and unclear ownership

When pilots originate from curiosity rather than a business objective, the success criteria are fuzzy. Who is accountable for outcomes—a line-of-business leader or IT? Without a single owner who controls process change and budget, AI remains a side project, not a lever for results.

Data and integration debt blocking production

Proofs of concept often run on curated samples. Scaling requires live data, identity resolution, permissions, and bidirectional integrations. If you can’t connect to systems of record or automate actions back into them, you’ll be stuck in “reporting AI,” not “doing AI.”

Undercooked governance, risk, and compliance

Security reviews, privacy controls, audit trails, and human-in-the-loop policies are table stakes to reach production. Midmarket firms with limited governance, risk, and compliance (GRC) resources often postpone these conversations until it is too late. By then, momentum is gone.

Why the Pilot Trap Is Getting Worse

AI pilots are harder to scale today because vendor sprawl, rising governance expectations, and talent constraints increase operational overhead. Leaders need fewer, stronger bets with clear owners, not more experiments.

McKinsey’s guidance for CIOs emphasizes killing nonperforming pilots and doubling down on those with technical feasibility and clear business value. Meanwhile, a Gartner poll shows many organizations are still in pilot/early production, reflecting the widening gap between experimentation and scaled operations. Midmarket realities—lean IT, limited data engineering, and cross-team dependencies—intensify the challenge.

Vendor sprawl and shadow IT

Teams collect point solutions for chat, summarization, and analytics. Each tool works in isolation; none owns a process end-to-end. Integrations and support become the bottleneck, not the model’s capability.

Model risk and safety requirements are rising

As AI moves closer to customers and revenue, expectations for security, privacy, bias controls, and auditability increase. Without early alignment on governance, approvals can delay or halt deployment entirely.

Talent scarcity and IT bandwidth

Midmarket IT teams already run lean. Building and maintaining custom agents, retrieval-augmented generation (RAG) pipelines, and integrations for every use case isn’t feasible. You need an approach that lets business users lead without increasing IT burden.

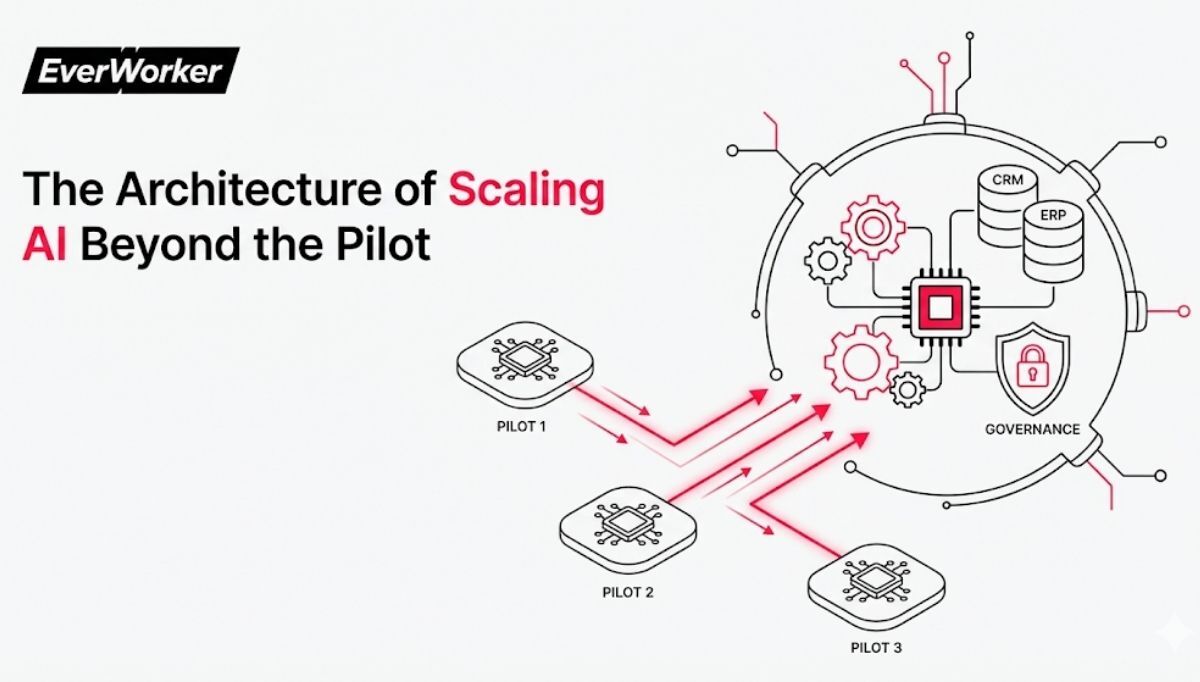

The Scale Architecture: From Use Case to Value Chain

Scaling AI means shifting from isolated use cases to owned value chains—end-to-end workflows that start with a business outcome, run on real data, act in core systems, and measure impact in financial terms.

The teams that break out of pilot purgatory design for scale from day one. They choose a high-volume, rules-driven process; define the desired outcome; and map each step from data access to system actions. They also identify the human touchpoints where approval or override is required. For guidance on picking the right automation form factor, see AI assistant vs. AI agent vs. AI worker.

Start with the business process, not the model

Define the target process (e.g., Tier‑1 support resolution, invoice matching, lead qualification), its inputs/outputs, SLAs, and success metrics. Select the model and tools that serve that process—not the other way around.
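One way to make that definition concrete is to write it down as a small structured record and check it for gaps before any model work starts. The sketch below is illustrative only: the `ProcessSpec` class, its field names, and the sample values are assumptions made for this example, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessSpec:
    """Hypothetical one-page definition of the process an AI pilot targets."""
    name: str
    owner: str                        # single accountable business owner
    inputs: tuple[str, ...]
    outputs: tuple[str, ...]
    sla_hours: float                  # illustrative SLA, expressed in hours
    success_metrics: tuple[str, ...]

    def gaps(self) -> list[str]:
        """Return the parts of the definition that are still missing."""
        missing = []
        if not self.owner.strip():
            missing.append("owner")
        if not self.inputs:
            missing.append("inputs")
        if not self.outputs:
            missing.append("outputs")
        if self.sla_hours <= 0:
            missing.append("sla_hours")
        if not self.success_metrics:
            missing.append("success_metrics")
        return missing


# Sample values are invented for illustration.
tier1_support = ProcessSpec(
    name="Tier-1 support resolution",
    owner="",  # no owner assigned yet
    inputs=("ticket text", "customer record"),
    outputs=("resolution reply", "ticket status update"),
    sla_hours=4.0,
    success_metrics=("time_to_resolution", "cost_per_ticket"),
)
print(tier1_support.gaps())  # ['owner']
```

A spec that still reports gaps, especially a missing owner, is a signal to pause before selecting models or tools.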

Build a production runway early

Secure data access, identity controls, and system integrations up front. Decide where the AI will take autonomous action and where a human reviews. Establish logging, audit trails, and rollback paths before go-live.
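As a rough illustration of deciding where the AI acts alone and where a human reviews, the sketch below routes each proposed action and records the decision in an audit log. Everything in it is an assumption made for the example: the scenario names, the 0.9 confidence floor, and the in-memory list standing in for a real append-only audit store. Rollback paths are out of scope here.

```python
import json
from datetime import datetime, timezone

# Hypothetical policy: only these scenarios are cleared for autonomous action.
AUTONOMOUS_SCENARIOS = {"password_reset", "order_status"}
CONFIDENCE_FLOOR = 0.9  # illustrative threshold, tune per process

audit_log: list[str] = []  # stand-in for an append-only audit store


def route_action(scenario: str, confidence: float, payload: dict) -> str:
    """Return 'autonomous' or 'human_review' and record the decision."""
    autonomous = scenario in AUTONOMOUS_SCENARIOS and confidence >= CONFIDENCE_FLOOR
    decision = "autonomous" if autonomous else "human_review"
    audit_log.append(json.dumps({
        "at": datetime.now(timezone.utc).isoformat(),
        "scenario": scenario,
        "confidence": confidence,
        "decision": decision,
        "payload": payload,
    }))
    return decision


print(route_action("order_status", 0.95, {"ticket": "T-1"}))  # autonomous
print(route_action("refund", 0.99, {"ticket": "T-2"}))        # human_review
```

Defaulting to human review for any scenario not explicitly listed keeps the autonomous scope small and easy to audit.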

Prove ROI with a 90‑day scale path

Plan from pilot to phased rollout: shadow mode, human-in-the-loop, then autonomous for defined scenarios. Tie KPIs to business value (time-to-resolution, cost per ticket, days sales outstanding (DSO), conversion rate) so budget owners see impact quickly. For a concrete example in support, see our 90-day customer support playbook.
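To show what tying a KPI to business value can look like, here is a minimal sketch of a weekly ROI calculation based on handling time per item. The function name, its parameters, and the sample figures are all assumptions for illustration; your own model may also need to account for quality, rework, or revenue effects.

```python
from typing import Optional


def weekly_roi(baseline_minutes: float, current_minutes: float,
               items_per_week: int, hourly_cost: float,
               weekly_platform_cost: float) -> dict:
    """Estimate weekly savings from reduced handling time per item."""
    hours_saved = (baseline_minutes - current_minutes) * items_per_week / 60
    gross_savings = hours_saved * hourly_cost
    net_savings = gross_savings - weekly_platform_cost
    # ROI is undefined when there is no cost to compare against.
    roi: Optional[float] = (
        net_savings / weekly_platform_cost if weekly_platform_cost > 0 else None
    )
    return {
        "hours_saved": hours_saved,
        "gross_savings": gross_savings,
        "net_savings": net_savings,
        "roi": roi,
    }


# Invented figures: 12 -> 7 minutes per ticket, 600 tickets a week,
# $40 per hour loaded labor cost, $800 per week platform cost.
print(weekly_roi(12, 7, 600, 40.0, 800.0))
# {'hours_saved': 50.0, 'gross_savings': 2000.0, 'net_savings': 1200.0, 'roi': 1.5}
```

Publishing the same few numbers every week, against a baseline captured before the pilot, makes the trend easy for budget owners to follow.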

From Pilot to Production: 90-Day Plan

A simple three-phase sequence turns a successful POC into measurable, scaled impact without disrupting operations. Treat this as a template and adapt it to your function.

- Days 1–14: Baseline and shadow mode. Select one high-volume process. Document steps, inputs, and outputs. Connect read-only data and run the AI in shadow: it proposes outputs while humans do the work. Measure agreement rates and identify gaps.

- Days 15–45: Human-in-the-loop. Enable write actions in limited scope. The AI prepares the work; your people approve or reject it. Track cycle time, error rate, and % of work requiring human edits. Tighten guardrails and add edge cases to the knowledge base.

- Days 46–90: Targeted autonomy + expansion. Move defined scenarios to autonomous execution with monitoring. Expand to adjacent steps in the same process. Publish weekly ROI: time saved, cost reduced, quality maintained or improved.
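The three phases above can be sketched as two small functions: one that measures agreement in shadow mode and one that decides when a process is ready to move to the next phase. This is a simplified illustration. Exact-match comparison, the 90% agreement threshold, and the 5% edit-rate threshold are assumptions chosen for the example, not recommended values.

```python
def agreement_rate(ai_outputs: list[str], human_outputs: list[str]) -> float:
    """Share of cases where the AI proposal matches what the human actually did."""
    if len(ai_outputs) != len(human_outputs):
        raise ValueError("need one AI proposal per human decision")
    if not ai_outputs:
        return 0.0
    matches = sum(a == h for a, h in zip(ai_outputs, human_outputs))
    return matches / len(ai_outputs)


def next_phase(phase: str, agreement: float, edit_rate: float) -> str:
    """Advance shadow -> human_in_the_loop -> autonomous once thresholds are met."""
    if phase == "shadow" and agreement >= 0.90:
        return "human_in_the_loop"
    if phase == "human_in_the_loop" and edit_rate <= 0.05:
        return "autonomous"
    return phase  # thresholds not met: stay in the current phase


ai = ["refund", "escalate", "close", "close"]
human = ["refund", "escalate", "close", "refund"]
rate = agreement_rate(ai, human)
print(rate)                                      # 0.75
print(next_phase("shadow", rate, edit_rate=1.0)) # shadow
```

In practice, free-text outputs rarely match exactly, so the comparison would likely need a rubric or reviewer judgment rather than string equality.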

This approach keeps risk low, builds trust, and creates the evidence executives need to fund expansion. If you need help choosing the right form factor and workflow, our guide “AI workflows that build themselves” explains how to design scalable automations.

From Tools to AI Workers: The Real Scale Shift

Most teams try to scale AI by adding point tools. But tools automate tasks; scale requires automating end-to-end processes. That’s the shift from “a bot in a channel” to “an AI worker” that owns outcomes across systems.

Traditional automation stacks require stitching scripts, connectors, and dashboards. They save minutes but rarely change the unit economics of your operation. An AI workforce approach treats AI as digital employees: specialized workers for discrete steps, orchestrated by a universal worker that understands your policies, data, and context. This maps to how your teams already work—and it’s why value multiplies instead of adding linearly.

This shift also changes who can lead. Instead of IT-led builds that take months, business users can describe work in natural language and deploy workers that operate inside existing systems. Governance isn’t an afterthought; it’s built in with role-based permissions, audit logs, and human oversight where required. The result is fewer pilots and faster production—aligned with the “conversation away” deployment philosophy we champion at EverWorker.

If you’ve been integrating point solutions and still can’t scale, it’s likely a paradigm issue: you’re optimizing tools, not processes. Move to AI workers that execute complete workflows, learn continuously, and deliver compounding ROI.

How EverWorker Solves Pilot Purgatory Instantly

EverWorker turns the 6–12 month journey from pilot to production into days and weeks by focusing on end-to-end processes, not isolated tasks. You describe the work; AI workers do it—inside your systems, with your data, under your governance.

Two platform capabilities make this possible. First, EverWorker Creator functions like an always-on AI engineering team. In a conversation, it turns your process description into a deployed worker—complete with testing, guardrails, and human-in-the-loop steps. Second, the Universal Connector provides instant interoperability. Upload an OpenAPI spec and EverWorker generates action capabilities across your CRM, ERP, support, or finance tools so workers can read and write where the work lives.

The impact is speed and certainty. Customers routinely stand up blueprint AI workers in hours, then customize and scale. We help you identify the top five highest-ROI use cases and put pilot versions in front of your team within days, with full production workers in about six weeks—eliminating the drawn-out implementation cycles that kill momentum. For background on our approach, see Create Powerful AI Workers in Minutes and our complete guide to AI customer service workforces.

Governance is built in: role-based permissions, audit trails, and human approvals where needed. Workers operate like your best employees—24/7, consistent, and continuously improving—so you’re not managing tools; you’re leading a capable AI workforce that compounds value over time.

Your Next Moves and Skill-Building Path

Put this article to work with focused steps that align to your goals and constraints.

- Immediate (This Week): Select one process with high volume and clear SLAs. Define owner, inputs/outputs, and success metrics. Baseline current cycle time and error rate.

- Short Term (2–4 Weeks): Connect read-only data and run shadow tests. Align governance requirements with Security/Legal and document human-in-the-loop points.

- Medium Term (30–60 Days): Turn on limited-scope write actions with approvals. Publish weekly ROI—time saved, cost reduced, quality maintained.

- Strategic (60–90+ Days): Expand autonomy for defined scenarios and add adjacent steps. Standardize a template so the next process can be deployed faster.

- Transformational: Shift from tools to AI workers that own outcomes across systems. Build an internal catalog of deployed workers and scale cross-functionally.

The fastest way to accelerate these steps is enabling your team with shared language and skills.

Your Team Becomes AI-First: EverWorker Academy offers AI Fundamentals, Advanced Concepts, Strategy, and Implementation certifications. Complete them in hours, not weeks. Your people transform from AI users to strategists to creators—building the organizational capability that turns AI from experiment to competitive advantage.

Immediate Impact, Efficient Scale: See Day 1 results through lower costs, increased revenue, and operational efficiency. Achieve ongoing value as you rapidly scale your AI workforce and drive true business transformation.

Make Scale Your Default

The reason most midmarket AI pilots fail to scale isn’t the model—it’s the missing production runway, ownership, and governance. Design for end-to-end processes, not demos. Use shadow → human-in-the-loop → autonomy to build trust. And shift from tools to AI workers that deliver measurable outcomes. That’s how you escape pilot purgatory—fast.
